
Current laptops with Intel Core Ultra Series 2 processors rely on a hybrid chip design geared specifically towards energy efficiency. The Neural Processing Unit (NPU), which Intel first brought to its consumer processors with the Core Ultra line, plays a central role here. This dedicated computing unit for AI tasks relieves the CPU and GPU of inference workloads such as image recognition, language processing, or other model-driven tasks.

In conventional systems the CPU had to take on many of these tasks, whereas the NPU allows load to be distributed in a much finer-grained way. This lowers the average system load and noticeably reduces energy requirements. Because many NPU calculations can run in parallel at a low clock frequency, the energy balance is significantly better than with purely CPU- or GPU-based architectures.

Energy-saving components in Intel Core Ultra

The Intel Core Ultra 200V models in particular combine four performance cores (P-cores) with four efficiency cores (E-cores) and a dedicated NPU to form a tiered computing unit. The P-cores take over performance-critical tasks, while the E-cores and the NPU stay active in the background and run routine processes and AI functions with low power requirements.


The integrated Intel Arc Graphics also plays a role in this context: it enables hardware-accelerated video decoding and graphics-intensive rendering without an additional dedicated GPU, which relieves the cooling system and reduces overall power consumption. The NPU delivers up to 48 TOPS of computing power with minimal power consumption. AI applications benefit from this, and so do users, because the notebook's energy requirements drop significantly.


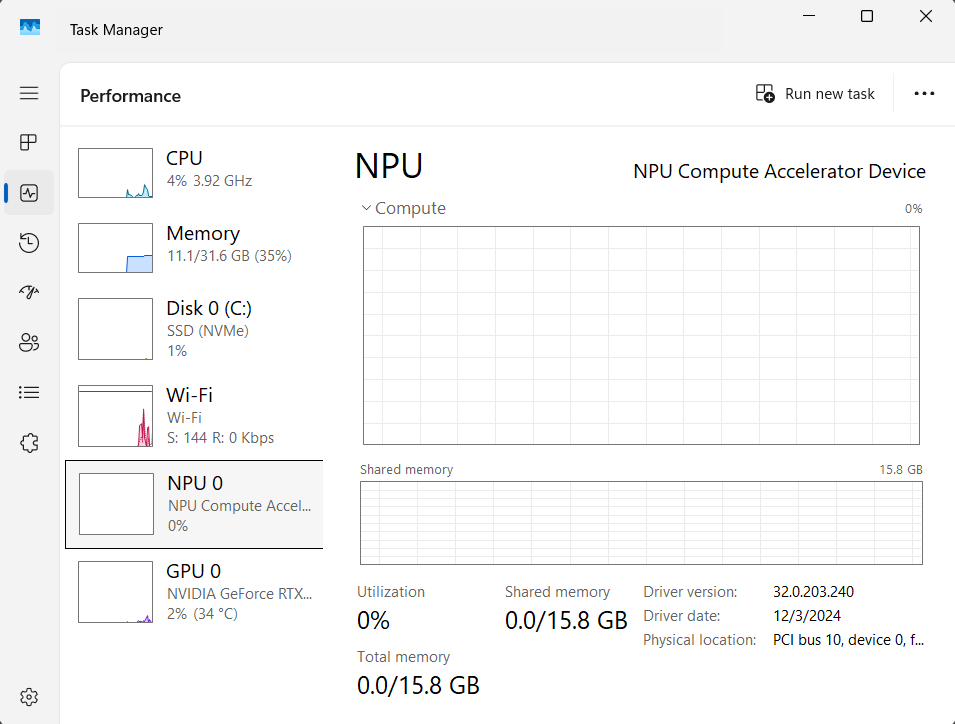

Microsoft’s energy-saving mechanisms under Windows 11

In parallel with the hardware platform, Windows 11 introduces new energy-saving strategies. "User Interaction-Aware CPU Power Management" analyzes user activity in real time. If no interaction via keyboard, mouse, or touchpad is detected, the system automatically throttles CPU performance without interrupting active media playback or presentations. In addition, the "Adaptive Energy Saver" function activates energy-saving mode regardless of the battery level, provided the system load and usage scenario allow it.


In both cases, the NPU can keep AI-supported functions active in the background without hurting the energy balance. The AI also balances priorities in the background, for example by delaying cloud synchronization or adaptively putting processes to rest.

HP OmniBook and other Copilot models in comparison

Devices such as HP's OmniBook X line already integrate these technologies system-wide. In combination with an Intel Core Ultra 7 258V and an Intel Arc 140V GPU, the NPU enables locally executed features such as Windows Studio Effects or the AI functions in HP AI Companion without noticeably draining the battery. Many other models also achieve battery runtimes of over 24 hours in mixed operation thanks to the use of NPUs. Models such as the Surface Laptop 6 or the Surface Pro 10 integrate a dedicated NPU directly into the Intel Core Ultra SoC, supplemented by high-performance CPU cores and integrated graphics.

Other compatible devices also rely on the Copilot concept, which combines powerful NPUs with intelligent energy management. Devices such as the Galaxy Book with RTX 4050/4070 or the Surface Pro 10 with Intel Core Ultra 7 demonstrate these possibilities. In practice, this means that even when language translation, background blurring or real-time image optimization are actively used, power consumption remains low.

Software-based optimization and AI offloading

On the software side, shifting compute-intensive workloads to the NPU contributes significantly to energy savings. Applications such as Zoom, Adobe Premiere Pro, or Amuse increasingly use native ONNX Runtime-based interfaces to offload AI processes such as image generation, object tracking, or audio filters to the NPU.


This reduces the energy requirements of the CPU, which is particularly noticeable during long sessions in video conferences or creative applications. The NPU is accessed via standardized interfaces such as DirectML, and both Intel and AMD platforms integrate natively with ONNX Runtime. Relieving the main processors in this way leads to a more even load distribution and therefore longer battery life.
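As a rough illustration of how an application might request this kind of offloading through ONNX Runtime, the sketch below orders execution providers by preference and keeps the CPU as a fallback. The provider names are ONNX Runtime's own identifiers, but the preference order, the helper name `pick_providers`, and the file name `model.onnx` are assumptions made for this example; nothing here describes how Zoom, Premiere Pro, or Amuse are actually implemented.

```python
# Preference order is an assumption for illustration: vendor providers that
# can target an NPU first, then DirectML, then the CPU provider.
PREFERRED = (
    "OpenVINOExecutionProvider",   # Intel platforms
    "VitisAIExecutionProvider",    # AMD platforms
    "DmlExecutionProvider",        # DirectML
    "CPUExecutionProvider",
)


def pick_providers(available, preferred=PREFERRED):
    """Return the preferred providers that are actually available, in order."""
    chosen = [name for name in preferred if name in available]
    # Keep a CPU fallback at the end of the list.
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


if __name__ == "__main__":
    try:
        import onnxruntime as ort  # third-party package, optional for this sketch
        available = ort.get_available_providers()
    except ImportError:
        available = ["CPUExecutionProvider"]
    print(pick_providers(available))
    # With a real model file (hypothetical name), the list would be passed as:
    # session = ort.InferenceSession("model.onnx", providers=pick_providers(available))
```

Which provider actually reaches the NPU depends on the installed ONNX Runtime build and drivers, so an application would normally verify the result rather than assume it.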

Interaction of CPU, GPU, and NPU in practice

In modern notebooks, the CPU, GPU, and NPU work as a dynamic processing trio. While the CPU continues to run the operating system and general applications, the GPU takes over graphics-intensive tasks and parallelized computing operations. The NPU concentrates on dedicated AI processes and enables continuous processing with low energy consumption. Windows 11 assigns these tasks in a targeted way and continuously evaluates which unit can execute them most efficiently.


This means that recurring tasks such as speech transcription, person recognition, or background noise filters can be processed directly on the NPU. This lowers not only power consumption but also system temperature, which allows for smaller cooling systems and therefore more compact and lighter notebook designs overall.

Local processing instead of cloud offloading

In many cases, running AI workloads locally on the NPU replaces the usual cloud access. Image analyses, language models, or layout suggestions no longer have to be calculated online but run entirely on the device. That reduces latency and avoids unnecessary network activity, which is another factor that lowers power consumption.

At the same time, these functions remain available even without a network connection, for example on the train or when traveling. Battery life then benefits in two ways: through a lower computing load on the CPU and GPU, and through reduced Wi-Fi or LTE/5G activity.
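The two effects can be made concrete with simple arithmetic: estimated runtime is battery capacity divided by total average power draw. The sketch below uses that relationship; every wattage and the 60 Wh capacity are hypothetical placeholders chosen for illustration, not measurements from any device.

```python
def runtime_hours(battery_wh, draw_watts):
    """Estimated runtime: battery capacity divided by total average draw."""
    total = sum(draw_watts.values())
    if total <= 0:
        raise ValueError("total power draw must be positive")
    return battery_wh / total


# All figures below are hypothetical placeholders, not measurements.
cloud_based = {"display_and_rest": 4.0, "cpu_gpu": 3.0, "radio": 1.0}
local_npu = {"display_and_rest": 4.0, "cpu_gpu": 1.5, "npu": 0.5, "radio": 0.5}

print(runtime_hours(60.0, cloud_based))  # 60 Wh / 8.0 W
print(runtime_hours(60.0, local_npu))    # 60 Wh / 6.5 W
```

In this toy model, the saving comes from both the smaller compute figure and the smaller radio figure, which mirrors the two contributions named above.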

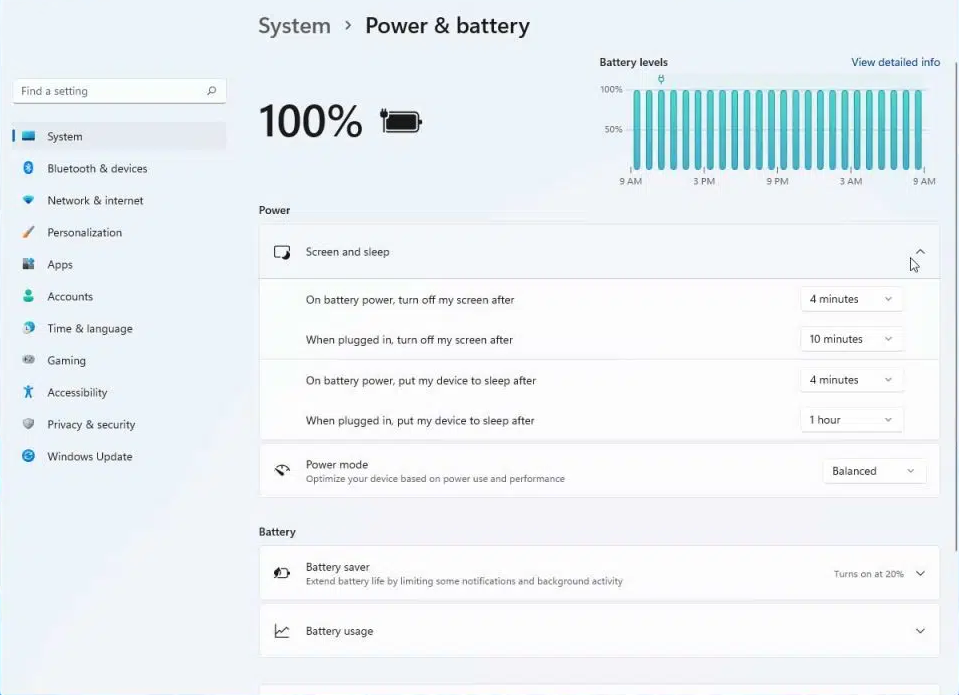

Windows 11 shows NPU utilization in Task Manager for the first time

Microsoft has expanded Task Manager to make this new architecture more transparent and easier to monitor. In addition to CPU, GPU, and RAM, NPU utilization is now displayed as a separate metric. This lets users see how much their AI applications actually benefit from the dedicated hardware.

For developers, ONNX Runtime in combination with the Windows Performance Analyzer also offers detailed diagnostic functions for analyzing inference times, operator load, and load curves. This enables fine-tuned optimization for maximum energy savings and minimal runtime delay.
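A minimal sketch of what such an analysis could look like: ONNX Runtime can write a JSON profile when `SessionOptions.enable_profiling` is set, and `InferenceSession.end_profiling()` returns the file name. The helper below sums durations per provider and operator. The event layout it relies on (`cat`, `dur` in microseconds, and `args` with `op_name` and `provider`) is an assumption about that profile format and should be checked against the file your ONNX Runtime version produces; the function names and the sample events are hypothetical.

```python
import json
from collections import defaultdict


def load_profile(path):
    """Load a profile file, assumed to be a JSON list of trace events."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def summarize_profile(events):
    """Sum event durations (microseconds) per (provider, operator) pair."""
    totals = defaultdict(int)
    for event in events:
        if event.get("cat") != "Node":
            continue  # skip session-level events
        args = event.get("args", {})
        key = (args.get("provider", "unknown"), args.get("op_name", "unknown"))
        totals[key] += event.get("dur", 0)
    # Most expensive (provider, operator) pairs first.
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Synthetic events for illustration only; not output from a real run.
sample = [
    {"cat": "Session", "name": "model_run", "dur": 1000},
    {"cat": "Node", "dur": 200, "args": {"op_name": "Conv", "provider": "DmlExecutionProvider"}},
    {"cat": "Node", "dur": 100, "args": {"op_name": "Conv", "provider": "DmlExecutionProvider"}},
    {"cat": "Node", "dur": 50, "args": {"op_name": "Softmax", "provider": "CPUExecutionProvider"}},
]

for (provider, op_name), micros in summarize_profile(sample):
    print(provider, op_name, micros)
```

A summary like this shows at a glance which operators still run on the CPU provider, which is usually the first thing to check when an offloaded model uses more power than expected.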


Battery life as the new benchmark for AI PCs

Attention has long focused on computing power and model size, but the emphasis is now shifting. The actual runtime of a device is increasingly becoming the most important quality criterion for AI-optimized notebooks. Modern AI notebooks achieve video playback times of over 26 hours under realistic conditions, a value that would be almost impossible to reach without NPU-supported load distribution.

At the same time, the combination of an adaptive energy-saving mode, local AI offloading, and intelligent load controls opens up new possibilities for mobile applications where the power supply is not always guaranteed.

Conclusion: Saving energy with specialized AI hardware

Integrating NPUs into current notebook platforms marks a technological advance in AI performance and, through intelligent task sharing, also enables a lasting reduction in energy consumption. Combined with the new energy-saving functions of Windows 11, the result is a platform that works both faster and noticeably more efficiently in everyday use. For users, this means longer battery life, less waste heat, quieter systems, and a better overall balance between performance and mobility, without sacrificing modern AI functions.

This article originally appeared on our sister publication PC-WELT and was translated and localized from German.
